Yessss!!! I've been anticipating this for a long time: real-time raytraced high-quality dynamic global illumination which is practical for games. Until now, the image quality of every real-time raytracing demo that I've seen in the context of a game was deeply disappointing:

- Quake 3 raytraced (http://www.youtube.com/watch?v=bpNZt3yDXno),

- Quake 4 raytraced (http://www.youtube.com/watch?v=Y5GteH4q47s),

- Quake Wars raytraced (http://www.youtube.com/watch?v=mtHDSG2wNho) (there's a pattern in there somewhere),

- Outbound (http://igad.nhtv.nl/~bikker/projects.htm),

- Let there be light (http://www.youtube.com/watch?v=33yrCV25A14),

- the last Larrabee demo, showing an extremely dull Quake Wars scene: a raytraced floating boat in a mountainous landscape, with some flying vehicles roaring over. Intel just showed a completely motionless scene, too afraid of revealing the low framerate when navigating (http://www.youtube.com/watch?v=b5TGA-IE85o),

- the Nvidia demo of the Bugatti at Siggraph 2008 (http://www.youtube.com/watch?v=BAZQlQ86IB4)

All of these demos lack one major feature: realtime dynamic global illumination. They just show Whitted raytracing, which makes the lighting look flat and dull and which, quality-wise, cannot seriously compete with rasterization (which uses many tricks to fake GI, such as baked GI, SSAO, SSGI, instant radiosity, precomputed radiance transfer and spherical harmonics, Crytek's light propagation volumes, ...).

The above videos would make you believe that real-time high-quality dynamic GI is still an undetermined amount of time away. But as the following video shows, that time is much closer than you would think: http://www.youtube.com/watch?v=dKZIzcioKYQ

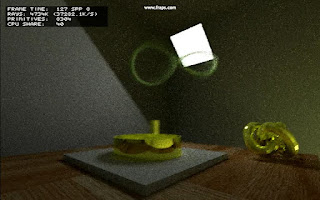

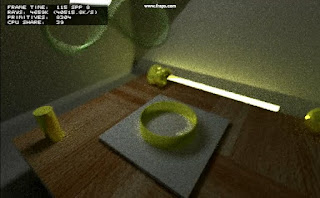

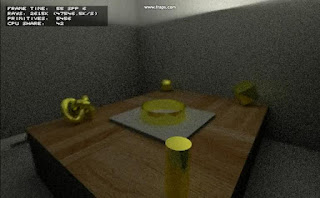

The technology demonstrated in the video was developed by Jacco Bikker (Phantom on ompf.org, who also developed the Arauna game engine, which uses realtime raytracing) and shows a glimpse of the future of graphics: real-time dynamic global illumination through pathtracing (probably bidirectional), computed on a hybrid architecture (CPU and GPU) achieving ~40 Mrays/sec on a Core i7 + GTX260. There's a dynamic floating object, and each frame accumulates 8 samples/pixel before being displayed. There are caustics from the reflective ring, cube and cylinder, as well as motion blur. The beauty of path tracing is that it inherently provides photorealistic graphics: no extra coding effort is required to get soft shadows, reflections, refractions and indirect lighting. It all works automagically (it also handles caustics, though not very efficiently). The photorealism is already there; now it's just a matter of speeding it up through code optimization, new algorithms (stochastic progressive photon mapping, Metropolis Light Transport, ...) and of course better hardware (CPU and GPU).

The video is imo a proof of concept of the feasibility of realtime pathtraced games: despite the low resolution, low framerate, low geometric complexity and the noise, there is an undeniable beauty to the unified global lighting for static and dynamic objects. I like it very, very much. I think a Myst- or Outbound-like game would be ideally suited to this technology: it's slow paced, you often hold still to inspect the scene for clues (so it's very tolerant of low framerates), and it contains only a few dynamic objects. I can't wait to see the kind of games built with this technology. Photorealistic game graphics with dynamic high-quality global illumination for everything just came a major step closer to becoming reality.

UPDATE: I've found a good mathematical explanation for the motion blur you're seeing in the video, which was achieved by averaging the samples of 4 frames (http://www.reddit.com/r/programming/comments/brsut/realtime_pathtracing_is_here/):

It is because there is too much variance in the lighting in this scene for the number of samples each frame takes to integrate the rendering equation (8; typically 'nice' results start at 100+ samples per pixel). Therefore you get noise which (if they implemented their pathtracer correctly) is unbiased. This means in turn that the amount of noise is proportional to the inverse of the square root of the number of samples. By averaging over 4 frames, they halve the noise as long as the camera is not moving.
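The noise argument can be checked numerically. Below is a minimal sketch, not the demo's code: it assumes a hypothetical pixel whose radiance samples are uniformly distributed in [0, 1), and the function names are made up for illustration. For an unbiased Monte Carlo estimator the standard deviation of the pixel value falls as 1/sqrt(N), so going from 8 samples (one frame) to 32 samples (four averaged frames) should roughly halve the noise.

```python
import random
import statistics

def estimate_pixel(num_samples, rng):
    """Monte Carlo estimate of one pixel: the mean of num_samples random samples.

    Assumption: the samples are uniform in [0, 1), a stand-in for the
    radiance carried by individual light paths.
    """
    return sum(rng.random() for _ in range(num_samples)) / num_samples

def noise_level(num_samples, trials=20000, seed=1):
    """Standard deviation of the pixel estimate over many independent trials."""
    rng = random.Random(seed)
    estimates = [estimate_pixel(num_samples, rng) for _ in range(trials)]
    return statistics.pstdev(estimates)

noise_8 = noise_level(8)    # one frame at 8 samples/pixel
noise_32 = noise_level(32)  # four averaged frames = 32 samples/pixel
ratio = noise_8 / noise_32  # expected to be close to sqrt(32 / 8) = 2

print(f"noise at 8 spp:  {noise_8:.4f}")
print(f"noise at 32 spp: {noise_32:.4f}")
print(f"ratio:           {ratio:.2f}")
```

The same scaling explains why noise-free images are expensive: halving the noise again costs another 4x the samples.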

UPDATE2: Jacco Bikker uploaded a new, even more amazing video to YouTube, showing a rotating light globally illuminating the scene with path tracing in real time at 14-18 fps (frame time: 55-70 ms)!

http://www.youtube.com/watch?v=Jm6hz2-gxZ0&playnext_from=TL&videos=ZkGZWOIKQV8

The frame averaging trick must have been used here too, because 6 samples per pixel cannot possibly give such good quality.
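How the averaging is actually implemented in the demo isn't stated. As a rough illustration only, here is a minimal sketch that assumes a sliding window of the last 4 frames which is thrown away whenever the camera moves. `FrameAverager`, `window` and `camera_moved` are hypothetical names, and a frame is simplified to a flat list of pixel intensities.

```python
from collections import deque

class FrameAverager:
    """Averages the most recent frames to reduce path tracing noise.

    Assumption: a frame is a flat list of float pixel intensities, and all
    frames have the same length.
    """

    def __init__(self, window=4):
        # deque(maxlen=...) silently drops the oldest frame once it is full.
        self.frames = deque(maxlen=window)

    def add(self, frame, camera_moved=False):
        """Store a new noisy frame and return the averaged frame to display."""
        if camera_moved:
            # Old frames show a different view; blending them in would smear
            # the image, so start over from the current frame.
            self.frames.clear()
        self.frames.append(list(frame))
        count = len(self.frames)
        # zip(*frames) groups the values of each pixel across stored frames.
        return [sum(pixel) / count for pixel in zip(*self.frames)]

averager = FrameAverager(window=4)
print(averager.add([1.0, 0.0]))                     # only one frame so far
print(averager.add([0.0, 1.0]))                     # average of two frames
print(averager.add([0.0, 0.0], camera_moved=True))  # history discarded
```

Objects that move while the camera stands still are blended across the stored frames too, which would show up as the motion blur mentioned above.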
